5 Confidence intervals

From the parameters’ ML estimates, we generate new data and re-estimate the parameters 1000 times.

From that list of new estimates we can get the 2.5th and 97.5th percentiles and get 95% confidence intervals for the parameters.

We show below how to derive confidence intervals using TMB, a likelihood profile based method, and parametric bootstrap, based on the 2 state Poisson HMM estimates.

For all three methods, we require a model, so we generate a 2-state Poisson HMM based on the TYT dataset

# Load TMB and optimization packages

library(TMB)

# Run the C++ file containing the TMB code

TMB::compile("code/poi_hmm.cpp")

## [1] 0

# Load it

dyn.load(dynlib("code/poi_hmm"))

# Load the parameter transformation function

source("functions/utils.R")

load("data/tinnitus.RData")

# Model with 2 states

m <- 2

TMB_data <- list(x = tinn_data, m = m)

# Generate initial set of parameters for optimization

lambda <- c(1, 3)

gamma <- matrix(c(0.8, 0.2,

0.2, 0.8), byrow = TRUE, nrow = m)

# Turn them into working parameters

parameters <- pois.HMM.pn2pw(m, lambda, gamma)

obj_tmb <- MakeADFun(TMB_data, parameters,

DLL = "poi_hmm", silent = TRUE)

# The negative log-likelihood is accessed by the objective

# attribute of the optimized object

mod_tmb <- nlminb(start = obj_tmb$par, objective = obj_tmb$fn,

gradient = obj_tmb$gr, hessian = obj_tmb$he)

mod_tmb$objective

## [1] 168.53615.1 Wald-type confidence

intervals based on the Hessian

Now that we have a model estimated via TMB, we can derive Wald-type (Wald 1943) confidence intervals.

For example, the \((1 - \alpha) \%\) CI for \(\lambda_1\) is given by \(\lambda_1 \pm z_{1-\alpha/2} * \sigma_{\lambda_1}\) where \(z_{x}\) is the \(x\)-percentile of the standard normal distribution, and \(\sigma_{\lambda_1}\) is the standard error of \(\lambda_1\) obtained via the delta method.

First, we require the standard errors. We can retrieve them from the MakeADFun object.

The standard errors of the working parameters tlambda and tgamma can be retrieved without needing to add ADREPORT in the C++ file.

However, it is usually more interesting to access the standard errors of the natural parameters lambda, gamma and delta. This requires adding a few lines to the C++ file to produce these standard errors, as detailed in [Getting started with a linear regression].

Be careful: adrep lists gamma column-wise.

adrep <- summary(sdreport(obj_tmb), "report")

adrep

## Estimate Std. Error

## lambda 1.63641100 0.27758296

## lambda 5.53309576 0.31876147

## gamma 0.94980209 0.04374676

## gamma 0.02592204 0.02088688

## gamma 0.05019791 0.04374676

## gamma 0.97407796 0.02088688

## delta 0.34054200 0.23056437

## delta 0.65945800 0.23056437Standard errors for \(\hat{{\boldsymbol\lambda}}\):

rows <- rownames(adrep) == "lambda"

lambda <- adrep[rows, "Estimate"]

lambda_std_error <- adrep[rows, "Std. Error"]

lambda

## lambda lambda

## 1.636411 5.533096

lambda_std_error

## lambda lambda

## 0.2775830 0.3187615Standard errors for \(\hat{{\boldsymbol\Gamma}}\)

rows <- rownames(adrep) == "gamma"

gamma <- adrep[rows, "Estimate"]

gamma <- matrix(gamma, ncol = m) # Convert to matrix

gamma_std_error <- adrep[rows, "Std. Error"]

gamma_std_error <- matrix(gamma_std_error, nrow = m, ncol = m)

gamma

## [,1] [,2]

## [1,] 0.94980209 0.05019791

## [2,] 0.02592204 0.97407796

gamma_std_error

## [,1] [,2]

## [1,] 0.04374676 0.04374676

## [2,] 0.02088688 0.02088688Standard errors for \(\hat{{\boldsymbol\delta}}\):

rows <- rownames(adrep) == "delta"

delta <- adrep[rows, "Estimate"]

delta_std_error <- adrep[rows, "Std. Error"]

delta

## delta delta

## 0.340542 0.659458

delta_std_error

## delta delta

## 0.2305644 0.23056445.2 Likelihood profile based

confidence intervals

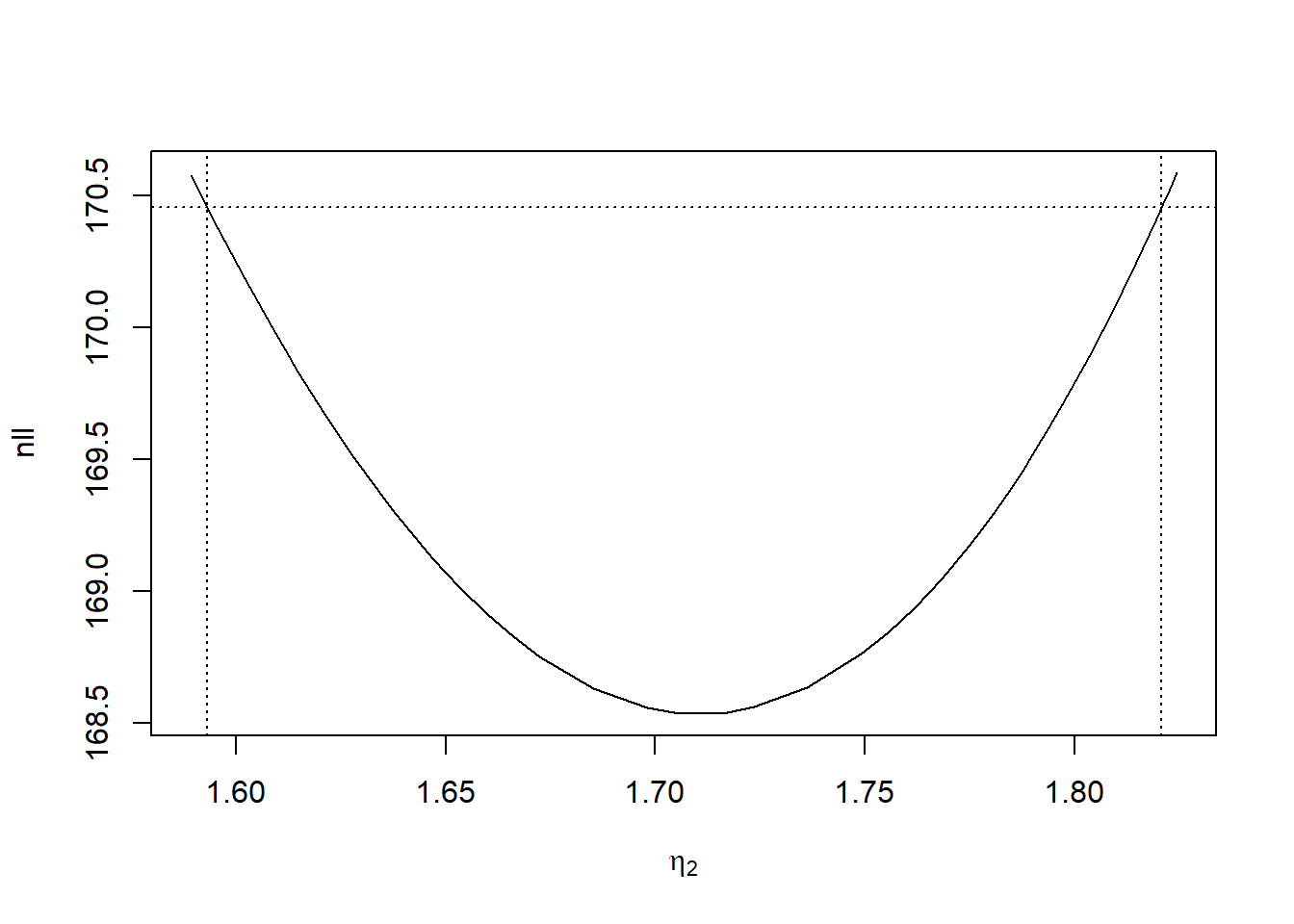

Our nll function is parametrized in terms of and optimized with respect to the working parameters. In practice, this aspect is easy to deal with. Once a profile CI for the working parameter (here \(\eta_2\)) has been obtained following the procedure above, the corresponding CI for the natural parameter \(\lambda_2\) results directly from transforming the upper and lower boundary of the CI for \(\eta_2\) by the one-to-one transformation \(\lambda_2 = \exp(\eta_2)\). For further details on the invariance of likelihood-based CIs to parameter transformations, we refer to Meeker and Escobar (1995).

Profiling \(\eta_2\) (the working parameter corresponding to \(\lambda_2\)) with TMB can be done with

profile <- tmbprofile(obj = obj_tmb,

name = 2,

trace = FALSE)

head(profile)

## tlambda value

## 67 1.589247 170.5801

## 66 1.592447 170.4783

## 65 1.595647 170.3790

## 64 1.598847 170.2821

## 63 1.602047 170.1877

## 62 1.605247 170.0957A plot allows for a visual representation of the profile

# par(mgp = c(2, 0.5, 0), mar = c(3, 3, 2.5, 1),

# cex.lab = 1.5)

plot(profile, level = 0.95,

xlab = expression(eta[2]),

ylab = "nll")

Then we can infer \(\eta_2\)’s confidence interval, and hence \(\lambda_2\)’s confidence interval

confint(profile)

## lower upper

## tlambda 1.593141 1.820641

exp(confint(profile))

## lower upper

## tlambda 4.919178 6.175815Further, profiling the TPM is done similarly. However, since individual natural TPM parameters cannot be deduced from single working parameters, we need to profile the entire working TPM then transform it back to a natural TPM.

profile3 <- tmbprofile(obj = obj_tmb,

name = 3,

trace = FALSE)

profile4 <- tmbprofile(obj = obj_tmb,

name = 4,

trace = FALSE)

# Obtain confidence intervals for working parameters

tgamma_3_confint <- confint(profile3)

tgamma_4_confint <- confint(profile4)

# Group lower bounds and upper bounds

lower <- c(tgamma_3_confint[1], tgamma_4_confint[1])

upper <- c(tgamma_3_confint[2], tgamma_4_confint[2])

# Infer bounds on natural parameters

gamma_1 <- gamma.w2n(m, lower)

gamma_2 <- gamma.w2n(m, upper)

# Display unsorted lower and upper bounds

gamma_1

## [,1] [,2]

## [1,] 0.99800287 0.001997133

## [2,] 0.00154545 0.998454550

gamma_2

## [,1] [,2]

## [1,] 0.81653046 0.1834695

## [2,] 0.08801416 0.9119858

# Sorted confidence interval for gamma_11

sort(c(gamma_1[1, 1], gamma_2[1, 1]))

## [1] 0.8165305 0.9980029It is noteworthy that gamma_1 is not necessarily lower than gamma_2, because only the working confidence intervals are automatically sorted.

Also note that linear combinations of parameters can be profiled by passing the lincomb argument.

More details are available by executing ??TMB::tmbprofile to access the tmbprofile’s help.

The name parameter should be the index of a parameter to profile.

While the function’s help mentions it can be a parameter’s name, the first two parameters are both named tlambda as can be seen here

mod_tmb$par

## tlambda tlambda tgamma tgamma

## 0.4925054 1.7107475 -3.6263979 -2.94028035.3 Bootstrap-based

confidence intervals

5.3.1 Generating data

In order to perform a parametric bootstrap, we need to be able to generate data from a set of parameters.

For readability and code maintenance, it is conventional to store procedures that will be used more than once into functions.

The data-generating function is defined in Zucchini, MacDonald, and Langrock (2016, secs. A.1.5 p.333).

pois.HMM.generate.sample <- function(ns,mod) {

mvect <- 1:mod$m

state <- numeric(ns)

state[1] <- sample(mvect, 1, prob = mod$delta)

for (i in 2:ns) {

state[i] <- sample(mvect, 1, prob = mod$gamma[state[i - 1], ])

}

x <- rpois(ns, lambda = mod$lambda[state])

return(x)

}Further, one can retrieve the state sequence used to generate the data by performing an adjustment:

# Generate a random sample from a HMM

pois.HMM.generate.sample <- function(ns, mod) {

mvect <- 1:mod$m

state <- numeric(ns)

state[1] <- sample(mvect, 1, prob = mod$delta)

for (i in 2:ns) {

state[i] <- sample(mvect, 1, prob = mod$gamma[state[i - 1], ])

}

x <- rpois(ns, lambda = mod$lambda[state])

return(list(data = x, state = state))

}The results are returned in a list to simplify usage, as the intuitive way (c(x, state)) would append the state sequence to the data.

In practice, HMMs sometimes cannot be estimated on generated samples.

To deal with this, we can generate a new sample as long as HMMs cannot be estimated on it, with the help of this more resilient function which can easily be adapted to different needs.

Natural parameter estimates are returned for convenience.

The argument test_marqLevAlg decides if convergence of marqLevAlg is required.

The argument std_error decides if standard errors are returned along with TMB’s estimates.

This function relies on the custom function TMB.estimate which is a wrapper of nlminb made for Poisson HMM estimation via TMB.

# Generate a random sample from a HMM

pois.HMM.generate.estimable.sample <- function(ns,

mod,

testing_params = mod,

params_names = PARAMS_NAMES,

return_std_error = FALSE,

return_the_MSE_estimators = FALSE,

control_args = CONTROL_ARGS,

debug_message = "") {

if(anyNA(c(ns, mod, testing_params))) {

stop("Some parameters are NA in pois.HMM.generate.estimable.sample")

}

# Count occurrences for each error

problems <- c("state_number" = 0,

"BFGS_null" = 0,

"BFGS_convergence_failures" = 0,

"BFGS_gr_null" = 0,

"BFGS_gr_convergence_failures" = 0,

"L_BFGS_B_null" = 0,

"L_BFGS_B_convergence_failures" = 0,

"L_BFGS_B_gr_null" = 0,

"L_BFGS_B_gr_convergence_failures" = 0,

"CG_null" = 0,

"CG_convergence_failures" = 0,

"CG_gr_null" = 0,

"CG_gr_convergence_failures" = 0,

"Nelder_Mead_null" = 0,

"Nelder_Mead_convergence_failures" = 0,

"nlm_null" = 0,

"nlm_convergence_failures" = 0,

"nlm_gr_null" = 0,

"nlm_gr_convergence_failures" = 0,

"nlm_he_null" = 0,

"nlm_he_convergence_failures" = 0,

"nlm_grhe_null" = 0,

"nlm_grhe_convergence_failures" = 0,

"nlminb_null" = 0,

"nlminb_convergence_failures" = 0,

"nlminb_gr_null" = 0,

"nlminb_gr_convergence_failures" = 0,

"nlminb_he_null" = 0,

"nlminb_he_convergence_failures" = 0,

"nlminb_grhe_null" = 0,

"nlminb_grhe_convergence_failures" = 0,

"hjn_null" = 0,

"hjn_convergence_failures" = 0,

"marqLevAlg_null" = 0,

"marqLevAlg_convergence_failures" = 0,

"marqLevAlg_gr_null" = 0,

"marqLevAlg_gr_convergence_failures" = 0,

"marqLevAlg_he_null" = 0,

"marqLevAlg_he_convergence_failures" = 0,

"marqLevAlg_grhe_null" = 0,

"marqLevAlg_grhe_convergence_failures" = 0,

# "ucminf_null" = 0,

# "ucminf_convergence_failures" = 0,

"newuoa_null" = 0,

"newuoa_convergence_failures" = 0,

"BBoptim_null" = 0,

"BBoptim_convergence_failures" = 0,

# "BBoptim_gr_null" = 0,

# "BBoptim_gr_convergence_failures" = 0,

"NA_value" = 0)

m <- mod$m

# Loop as long as there is an issue with nlminb, and prevent messages

# sink(nullfile())

# sink("log.txt")

repeat {

#simulate the data

new_data <- pois.HMM.generate.sample(ns = ns,

mod = mod)

# If the number of states generated is different from m, discard the data

if (length(unique(new_data$state)) != m) {

problems["state_number"] <- problems["state_number"] + 1

next

}

TMB_new_data <- list(x = new_data$data,

m = m)

if (return_the_MSE_estimators == TRUE) {

TMB_new_data$true_lambda <- mod$lambda

TMB_new_data$true_gamma <- mod$gamma

TMB_new_data$true_delta <- mod$delta

}

testing_w_params <- pois.HMM.pn2pw(m = m,

lambda = testing_params$lambda,

gamma = testing_params$gamma,

delta = testing_params$delta)

# If at least an optimizer shows an issue, regenerate a sample

problem <- FALSE

# Test BFGS

result_BFGS <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "BFGS",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_BFGS)) {

problems["BFGS_error"] <- problems["BFGS_error"] + 1

problem <- TRUE

} else if (result_BFGS$convergence == FALSE) {

problems["BFGS_convergence_failures"] <- problems["BFGS_convergence_failures"] + 1

problem <- TRUE

}

# Test BFGS with gradient

result_BFGS_gr <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

gradient = TRUE,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "BFGS",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_BFGS_gr)) {

problems["BFGS_gr_error"] <- problems["BFGS_gr_error"] + 1

problem <- TRUE

} else if (result_BFGS_gr$convergence == FALSE) {

problems["BFGS_gr_convergence_failures"] <- problems["BFGS_gr_convergence_failures"] + 1

problem <- TRUE

}

# Test L-BFGS-B

result_L_BFGS_B <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "L-BFGS-B",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_L_BFGS_B)) {

problems["L_BFGS_B_error"] <- problems["L_BFGS_B_error"] + 1

problem <- TRUE

} else if (result_L_BFGS_B$convergence == FALSE) {

problems["L_BFGS_B_convergence_failures"] <- problems["L_BFGS_B_convergence_failures"] + 1

problem <- TRUE

}

# Test L-BFGS-B with gradient

result_L_BFGS_B_gr <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

gradient = TRUE,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "L-BFGS-B",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_L_BFGS_B_gr)) {

problems["L_BFGS_B_gr_error"] <- problems["L_BFGS_B_gr_error"] + 1

problem <- TRUE

} else if (result_L_BFGS_B_gr$convergence == FALSE) {

problems["L_BFGS_B_gr_convergence_failures"] <- problems["L_BFGS_B_gr_convergence_failures"] + 1

problem <- TRUE

}

# Test CG

result_CG <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "CG",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_CG)) {

problems["CG_error"] <- problems["CG_error"] + 1

problem <- TRUE

} else if (result_CG$convergence == FALSE) {

problems["CG_convergence_failures"] <- problems["CG_convergence_failures"] + 1

problem <- TRUE

}

# Test CG with gradient

result_CG_gr <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

gradient = TRUE,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "CG",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_CG_gr)) {

problems["CG_gr_error"] <- problems["CG_gr_error"] + 1

problem <- TRUE

} else if (result_CG_gr$convergence == FALSE) {

problems["CG_gr_convergence_failures"] <- problems["CG_gr_convergence_failures"] + 1

problem <- TRUE

}

# Test Nelder-Mead

result_Nelder_Mead <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "Nelder-Mead",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_Nelder_Mead)) {

problems["Nelder_Mead_error"] <- problems["Nelder_Mead_error"] + 1

problem <- TRUE

} else if (result_Nelder_Mead$convergence == FALSE) {

problems["Nelder_Mead_convergence_failures"] <- problems["Nelder_Mead_convergence_failures"] + 1

problem <- TRUE

}

# Test nlm

result_nlm <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "nlm",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_nlm)) {

problems["nlm_error"] <- problems["nlm_error"] + 1

problem <- TRUE

} else if (result_nlm$convergence == FALSE) {

problems["nlm_convergence_failures"] <- problems["nlm_convergence_failures"] + 1

problem <- TRUE

}

# Test nlm with gradient

result_nlm_gr <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

gradient = TRUE,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "nlm",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_nlm_gr)) {

problems["nlm_gr_error"] <- problems["nlm_gr_error"] + 1

problem <- TRUE

} else if (result_nlm_gr$convergence == FALSE) {

problems["nlm_gr_convergence_failures"] <- problems["nlm_gr_convergence_failures"] + 1

problem <- TRUE

}

# Test nlm with hessian

result_nlm_he <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

hessian = TRUE,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "nlm",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_nlm_he)) {

problems["nlm_he_error"] <- problems["nlm_he_error"] + 1

problem <- TRUE

} else if (result_nlm_he$convergence == FALSE) {

problems["nlm_he_convergence_failures"] <- problems["nlm_he_convergence_failures"] + 1

problem <- TRUE

}

# Test nlm with gradient and hessian

result_nlm_grhe <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

gradient = TRUE,

hessian = TRUE,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "nlm",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_nlm_grhe)) {

problems["nlm_grhe_error"] <- problems["nlm_grhe_error"] + 1

problem <- TRUE

} else if (result_nlm_grhe$convergence == FALSE) {

problems["nlm_grhe_convergence_failures"] <- problems["nlm_grhe_convergence_failures"] + 1

problem <- TRUE

}

# Test nlminb

result_nlminb <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "nlminb",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_nlminb)) {

problems["nlminb_error"] <- problems["nlminb_error"] + 1

problem <- TRUE

} else if (result_nlminb$convergence == FALSE) {

problems["nlminb_convergence_failures"] <- problems["nlminb_convergence_failures"] + 1

problem <- TRUE

}

# Test nlminb with gradient

result_nlminb_gr <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

gradient = TRUE,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "nlminb",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_nlminb_gr)) {

problems["nlminb_gr_error"] <- problems["nlminb_gr_error"] + 1

problem <- TRUE

} else if (result_nlminb_gr$convergence == FALSE) {

problems["nlminb_gr_convergence_failures"] <- problems["nlminb_gr_convergence_failures"] + 1

problem <- TRUE

}

# Test nlminb with hessian

result_nlminb_he <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

hessian = TRUE,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "nlminb",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_nlminb_he)) {

problems["nlminb_he_error"] <- problems["nlminb_he_error"] + 1

problem <- TRUE

} else if (result_nlminb_he$convergence == FALSE) {

problems["nlminb_he_convergence_failures"] <- problems["nlminb_he_convergence_failures"] + 1

problem <- TRUE

}

# Test nlminb with gradient and hessian

result_nlminb_grhe <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

gradient = TRUE,

hessian = TRUE,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "nlminb",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_nlminb_grhe)) {

problems["nlminb_grhe_error"] <- problems["nlminb_grhe_error"] + 1

problem <- TRUE

} else if (result_nlminb_grhe$convergence == FALSE) {

problems["nlminb_grhe_convergence_failures"] <- problems["nlminb_grhe_convergence_failures"] + 1

problem <- TRUE

}

# Test hjn

result_hjn <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "hjn",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_hjn)) {

problems["hjn_error"] <- problems["hjn_error"] + 1

problem <- TRUE

} else if (result_hjn$convergence == FALSE) {

problems["hjn_convergence_failures"] <- problems["hjn_convergence_failures"] + 1

problem <- TRUE

}

# Test marqLevAlg

result_marqLevAlg <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "marqLevAlg",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_marqLevAlg)) {

problems["marqLevAlg_error"] <- problems["marqLevAlg_error"] + 1

problem <- TRUE

} else if (result_marqLevAlg$convergence == FALSE) {

problems["marqLevAlg_convergence_failures"] <- problems["marqLevAlg_convergence_failures"] + 1

problem <- TRUE

}

# Test marqLevAlg with gradient

result_marqLevAlg_gr <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

gradient = TRUE,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "marqLevAlg",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_marqLevAlg_gr)) {

problems["marqLevAlg_gr_error"] <- problems["marqLevAlg_gr_error"] + 1

problem <- TRUE

} else if (result_marqLevAlg_gr$convergence == FALSE) {

problems["marqLevAlg_gr_convergence_failures"] <- problems["marqLevAlg_gr_convergence_failures"] + 1

problem <- TRUE

}

# Test marqLevAlg with hessian

result_marqLevAlg_he <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

hessian = TRUE,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "marqLevAlg",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_marqLevAlg_he)) {

problems["marqLevAlg_he_error"] <- problems["marqLevAlg_he_error"] + 1

problem <- TRUE

} else if (result_marqLevAlg_he$convergence == FALSE) {

problems["marqLevAlg_he_convergence_failures"] <- problems["marqLevAlg_he_convergence_failures"] + 1

problem <- TRUE

}

# Test marqLevAlg with gradient and hessian

result_marqLevAlg_grhe <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

gradient = TRUE,

hessian = TRUE,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "marqLevAlg",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_marqLevAlg_grhe)) {

problems["marqLevAlg_grhe_error"] <- problems["marqLevAlg_grhe_error"] + 1

problem <- TRUE

} else if (result_marqLevAlg_grhe$convergence == FALSE) {

problems["marqLevAlg_grhe_convergence_failures"] <- problems["marqLevAlg_grhe_convergence_failures"] + 1

problem <- TRUE

}

# # Test ucminf

# result_ucminf <- TMB.estimate(TMB_data = TMB_new_data,

# working_parameters = testing_w_params,

# gradient = TRUE,

# hessian = TRUE,

# std_error = return_std_error,

# return_MSE_estimators = return_the_MSE_estimators,

# optimizer = "ucminf",

# debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

# if (is.null(result_ucminf)) {

# problems["ucminf_error"] <- problems["ucminf_error"] + 1

# problem <- TRUE

# } else if (result_ucminf$convergence == FALSE) {

# problems["ucminf_convergence_failures"] <- problems["ucminf_convergence_failures"] + 1

# problem <- TRUE

# }

# Test newuoa

result_newuoa <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "newuoa",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_newuoa)) {

problems["newuoa_error"] <- problems["newuoa_error"] + 1

problem <- TRUE

} else if (result_newuoa$convergence == FALSE) {

problems["newuoa_convergence_failures"] <- problems["newuoa_convergence_failures"] + 1

problem <- TRUE

}

# Test BBoptim

result_BBoptim <- TMB.estimate(TMB_data = TMB_new_data,

working_parameters = testing_w_params,

std_error = return_std_error,

return_MSE_estimators = return_the_MSE_estimators,

optimizer = "BBoptim",

debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

if (is.null(result_BBoptim)) {

problems["BBoptim_error"] <- problems["BBoptim_error"] + 1

problem <- TRUE

} else if (result_BBoptim$convergence == FALSE) {

problems["BBoptim_convergence_failures"] <- problems["BBoptim_convergence_failures"] + 1

problem <- TRUE

}

# # Test BBoptim with gradient

# result_BBoptim_gr <- TMB.estimate(TMB_data = TMB_new_data,

# working_parameters = testing_w_params,

# gradient = TRUE,

# std_error = return_std_error,

# return_MSE_estimators = return_the_MSE_estimators,

# optimizer = "BBoptim",

# debug_message = paste(debug_message, "> pois.HMM.generate.estimable.sample"))

# if (is.null(result_BBoptim_gr)) {

# problems["BBoptim_gr_error"] <- problems["BBoptim_gr_error"] + 1

# problem <- TRUE

# } else if (result_BBoptim_gr$convergence == FALSE) {

# problems["BBoptim_gr_convergence_failures"] <- problems["BBoptim_gr_convergence_failures"] + 1

# problem <- TRUE

# }

# Retrieve nlminb's parameters

natural_parameters <- list(m = m,

lambda = result_nlminb$lambda,

gamma = result_nlminb$gamma,

delta = result_nlminb$delta,

lambda_std_error = result_nlminb$lambda_std_error,

gamma_std_error = result_nlminb$gamma_std_error,

delta_std_error = result_nlminb$delta_std_error)

# If some parameters are NA for some reason, discard the data

if (anyNA(natural_parameters[params_names], recursive = TRUE)) {

problems["NA_value"] <- problems["NA_value"] + 1

problem <- TRUE

}

# If everything went well, end the "repeat" loop

# Otherwise, the loop continues

if (problem == FALSE) {

break

}

}

#sink()

# Retrieve negative log-likelihoods across all optimizers

all_nlls <- data.frame("BFGS" = result_BFGS$nll,

"BFGS_gr" = result_BFGS_gr$nll,

"L_BFGS_B" = result_L_BFGS_B$nll,

"L_BFGS_B_gr" = result_L_BFGS_B_gr$nll,

"CG" = result_CG$nll,

"CG_gr" = result_CG_gr$nll,

"Nelder_Mead" = result_Nelder_Mead$nll,

"nlm" = result_nlm$nll,

"nlm_gr" = result_nlm_gr$nll,

"nlm_he" = result_nlm_he$nll,

"nlm_grhe" = result_nlm_grhe$nll,

"nlminb" = result_nlminb$nll,

"nlminb_gr" = result_nlminb_gr$nll,

"nlminb_he" = result_nlminb_he$nll,

"nlminb_grhe" = result_nlminb_grhe$nll,

"hjn" = result_hjn$nll,

"marqLevAlg" = result_marqLevAlg$nll,

"marqLevAlg_gr" = result_marqLevAlg_gr$nll,

"marqLevAlg_he" = result_marqLevAlg_he$nll,

"marqLevAlg_grhe" = result_marqLevAlg_grhe$nll,

# "ucminf" = result_ucminf$nll,

"newuoa" = result_newuoa$nll,

"BBoptim" = result_BBoptim$nll)

# "BBoptim_gr" = result_BBoptim_gr$nll)

rownames_mles <- c(paste0("lambda", 1:m), paste0("gamma", 1:(m ^ 2)), paste0("delta", 1:m))

if (return_the_MSE_estimators == TRUE) {

all_MSE_estimators <- data.frame("BFGS" = result_BFGS$MSE_estimators,

"BFGS_gr" = result_BFGS_gr$MSE_estimators,

"L_BFGS_B" = result_L_BFGS_B$MSE_estimators,

"L_BFGS_B_gr" = result_L_BFGS_B_gr$MSE_estimators,

"CG" = result_CG$MSE_estimators,

"CG_gr" = result_CG_gr$MSE_estimators,

"Nelder_Mead" = result_Nelder_Mead$MSE_estimators,

"nlm" = result_nlm$MSE_estimators,

"nlm_gr" = result_nlm_gr$MSE_estimators,

"nlm_he" = result_nlm_he$MSE_estimators,

"nlm_grhe" = result_nlm_grhe$MSE_estimators,

"nlminb" = result_nlminb$MSE_estimators,

"nlminb_gr" = result_nlminb_gr$MSE_estimators,

"nlminb_he" = result_nlminb_he$MSE_estimators,

"nlminb_grhe" = result_nlminb_grhe$MSE_estimators,

"hjn" = result_hjn$MSE_estimators,

"marqLevAlg" = result_marqLevAlg$MSE_estimators,

"marqLevAlg_gr" = result_marqLevAlg_gr$MSE_estimators,

"marqLevAlg_he" = result_marqLevAlg_he$MSE_estimators,

"marqLevAlg_grhe" = result_marqLevAlg_grhe$MSE_estimators,

# "ucminf" = result_ucminf$MSE_estimators,

"newuoa" = result_newuoa$MSE_estimators,

"BBoptim" = result_BBoptim$MSE_estimators)

# "BBoptim_gr" = result_BBoptim_gr$MSE_estimators)

}

all_natural_parameters <- data.frame("m" = m,

"Parameter" = rownames_mles,

"BFGS" = unlist(result_BFGS[params_names]),

"BFGS_gr" = unlist(result_BFGS_gr[params_names]),

"L_BFGS_B" = unlist(result_L_BFGS_B[params_names]),

"L_BFGS_B_gr" = unlist(result_L_BFGS_B_gr[params_names]),

"CG" = unlist(result_CG[params_names]),

"CG_gr" = unlist(result_CG_gr[params_names]),

"Nelder_Mead" = unlist(result_Nelder_Mead[params_names]),

"nlm" = unlist(result_nlm[params_names]),

"nlm_gr" = unlist(result_nlm_gr[params_names]),

"nlm_he" = unlist(result_nlm_he[params_names]),

"nlm_grhe" = unlist(result_nlm_grhe[params_names]),

"nlminb" = unlist(result_nlminb[params_names]),

"nlminb_gr" = unlist(result_nlminb_gr[params_names]),

"nlminb_he" = unlist(result_nlminb_he[params_names]),

"nlminb_grhe" = unlist(result_nlminb_grhe[params_names]),

"hjn" = unlist(result_hjn[params_names]),

"marqLevAlg" = unlist(result_marqLevAlg[params_names]),

"marqLevAlg_gr" = unlist(result_marqLevAlg_gr[params_names]),

"marqLevAlg_he" = unlist(result_marqLevAlg_he[params_names]),

"marqLevAlg_grhe" = unlist(result_marqLevAlg_grhe[params_names]),

# "ucminf" = unlist(result_ucminf[params_names]),

"newuoa" = unlist(result_newuoa[params_names]),

"BBoptim" = unlist(result_BBoptim[params_names]))

# "BBoptim_gr" = unlist(result_BBoptim_gr[params_names]))

row.names(all_natural_parameters) <- NULL

all_natural_parameters_list <- list("BFGS" = result_BFGS[params_names],

"BFGS_gr" = result_BFGS_gr[params_names],

"L_BFGS_B" = result_L_BFGS_B[params_names],

"L_BFGS_B_gr" = result_L_BFGS_B_gr[params_names],

"CG" = result_CG[params_names],

"CG_gr" = result_CG_gr[params_names],

"Nelder_Mead" = result_Nelder_Mead[params_names],

"nlm" = result_nlm[params_names],

"nlm_gr" = result_nlm_gr[params_names],

"nlm_he" = result_nlm_he[params_names],

"nlm_grhe" = result_nlm_grhe[params_names],

"nlminb" = result_nlminb[params_names],

"nlminb_gr" = result_nlminb_gr[params_names],

"nlminb_he" = result_nlminb_he[params_names],

"nlminb_grhe" = result_nlminb_grhe[params_names],

"hjn" = result_hjn[params_names],

"marqLevAlg" = result_marqLevAlg[params_names],

"marqLevAlg_gr" = result_marqLevAlg_gr[params_names],

"marqLevAlg_he" = result_marqLevAlg_he[params_names],

"marqLevAlg_grhe" = result_marqLevAlg_grhe[params_names],

# "ucminf" = result_ucminf[params_names],

"newuoa" = result_newuoa[params_names],

"BBoptim" = result_BBoptim[params_names])

# "BBoptim_gr" = result_BBoptim_gr[params_names])

all_iterations <- list("BFGS" = result_BFGS$iterations,

"BFGS_gr" = result_BFGS_gr$iterations,

"L_BFGS_B" = result_L_BFGS_B$iterations,

"L_BFGS_B_gr" = result_L_BFGS_B_gr$iterations,

"CG" = result_CG$iterations,

"CG_gr" = result_CG_gr$iterations,

"Nelder_Mead" = result_Nelder_Mead$iterations,

"nlm" = result_nlm$iterations,

"nlm_gr" = result_nlm_gr$iterations,

"nlm_he" = result_nlm_he$iterations,

"nlm_grhe" = result_nlm_grhe$iterations,

"nlminb" = result_nlminb$iterations,

"nlminb_gr" = result_nlminb_gr$iterations,

"nlminb_he" = result_nlminb_he$iterations,

"nlminb_grhe" = result_nlminb_grhe$iterations,

"hjn" = result_hjn$iterations,

"marqLevAlg" = result_marqLevAlg$iterations,

"marqLevAlg_gr" = result_marqLevAlg_gr$iterations,

"marqLevAlg_he" = result_marqLevAlg_he$iterations,

"marqLevAlg_grhe" = result_marqLevAlg_grhe$iterations,

# "ucminf" = iterations,

"newuoa" = result_newuoa$iterations,

"BBoptim" = result_BBoptim$iterations)

if (return_std_error == TRUE) {

params_names <- c(params_names, "nll")

rownames_mles <- c(rownames_mles, "nll")

params_names <- paste0(params_names, "_std_error")

rownames_mles <- paste0(rownames_mles, "_std_error")

all_std_errors <- data.frame("m" = m,

"Parameter" = rownames_mles,

"BFGS" = unlist(result_BFGS[params_names]),

"BFGS_gr" = unlist(result_BFGS_gr[params_names]),

"L_BFGS_B" = unlist(result_L_BFGS_B[params_names]),

"L_BFGS_B_gr" = unlist(result_L_BFGS_B_gr[params_names]),

"CG" = unlist(result_CG[params_names]),

"CG_gr" = unlist(result_CG_gr[params_names]),

"Nelder_Mead" = unlist(result_Nelder_Mead[params_names]),

"nlm" = unlist(result_nlm[params_names]),

"nlm_gr" = unlist(result_nlm_gr[params_names]),

"nlm_he" = unlist(result_nlm_he[params_names]),

"nlm_grhe" = unlist(result_nlm_grhe[params_names]),

"nlminb" = unlist(result_nlminb[params_names]),

"nlminb_gr" = unlist(result_nlminb_gr[params_names]),

"nlminb_he" = unlist(result_nlminb_he[params_names]),

"nlminb_grhe" = unlist(result_nlminb_grhe[params_names]),

"hjn" = unlist(result_hjn[params_names]),

"marqLevAlg" = unlist(result_marqLevAlg[params_names]),

"marqLevAlg_gr" = unlist(result_marqLevAlg_gr[params_names]),

"marqLevAlg_he" = unlist(result_marqLevAlg_he[params_names]),

"marqLevAlg_grhe" = unlist(result_marqLevAlg_grhe[params_names]),

# "ucminf" = unlist(result_ucminf[params_names]),

"newuoa" = unlist(result_newuoa[params_names]),

"BBoptim" = unlist(result_BBoptim[params_names]))

# "BBoptim_gr" = unlist(result_BBoptim_gr[params_names]))

row.names(all_std_errors) <- NULL

}

result <- list(data = new_data$data,

states = new_data$state,

natural_parameters = natural_parameters,

all_natural_parameters = all_natural_parameters,

all_natural_parameters_list = all_natural_parameters_list,

all_nlls = all_nlls,

all_iterations = all_iterations,

problems = problems)

# Return all MSEs derived from estimates

if (return_the_MSE_estimators == TRUE) {

result$all_MSE_estimators <- all_MSE_estimators

}

# Return all std errors

if (return_std_error == TRUE) {

result$all_std_errors <- all_std_errors

}

return(result)

}5.3.2 Bootstrap

set.seed(123)

library(TMB)

TMB::compile("code/poi_hmm.cpp")

dyn.load(dynlib("code/poi_hmm"))

source("functions/utils.R")

m <- 2

load("data/tinnitus.RData")

TMB_data <- list(x = tinn_data, m = m)

# Initial set of parameters

lambda_init <- c(1, 3)

gamma_init <- matrix(c(0.8, 0.2,

0.2, 0.8), byrow = TRUE, nrow = m)

# Turn them into working parameters

parameters <- pois.HMM.pn2pw(m, lambda_init, gamma_init)

# Build the TMB object

obj_tmb <- MakeADFun(TMB_data, parameters,

DLL = "poi_hmm", silent = TRUE)

# Optimize

mod_tmb <- nlminb(start = obj_tmb$par,

objective = obj_tmb$fn,

gradient = obj_tmb$gr,

hessian = obj_tmb$he)

# Bootstrap procedure

bootstrap_estimates <- data.frame()

DATA_SIZE <- length(tinn_data)

# Set how many parametric bootstrap samples we create

BOOTSTRAP_SAMPLES <- 10

# MLE

ML_working_estimates <- obj_tmb$par

ML_natural_estimates <- obj_tmb$report(ML_working_estimates)

lambda <- ML_natural_estimates$lambda

gamma <- ML_natural_estimates$gamma

delta <- ML_natural_estimates$delta

PARAMS_NAMES <- c("lambda", "gamma", "delta")

for (idx_sample in 1:BOOTSTRAP_SAMPLES) {

# Generate a sample based on mod, and ensure a HMM can be estimated on it

# with testing_params as initial parameters

temp <- pois.HMM.generate.estimable.sample(ns = DATA_SIZE,

mod = list(m = m,

lambda = lambda,

gamma = gamma),

testing_params = list(m = m,

lambda = lambda_init,

gamma = gamma_init))$natural_parameters

# The values from gamma are taken columnwise

natural_parameters <- unlist(temp[PARAMS_NAMES])

len_par <- length(natural_parameters)

bootstrap_estimates[idx_sample, 1:len_par] <- natural_parameters

}

# Lower and upper (2.5% and 97.5%) bounds

q <- apply(bootstrap_estimates, 2, function(par_estimate) {

quantile(par_estimate, probs = c(0.025, 0.975))

})

PARAMS_NAMES <- paste0(rep("lambda", m), 1:m)

# Get row and column indices for gamma instead of the default

# columnwise index: the default indices are 1:m for the 1st column,

# then (m + 1):(2 * m) for the 2nd, etc...

for (gamma_idx in 1:m ^ 2) {

row <- (gamma_idx - 1) %% m + 1

col <- (gamma_idx - 1) %/% m + 1

row_col_idx <- c(row, col)

PARAMS_NAMES <- c(PARAMS_NAMES,

paste0("gamma",

paste0(row_col_idx, collapse = "")))

}

PARAMS_NAMES <- c(PARAMS_NAMES,

paste0(rep("delta", m), 1:m))

bootstrap_CI <- data.frame("Parameter" = PARAMS_NAMES,

"Estimate" = c(lambda, gamma, delta),

"Lower bound" = q[1, ],

"Upper bound" = q[2, ])

print(bootstrap_CI, row.names = FALSE)It should be noted that some bootstrap estimates can be very large or very small. One possible reason is that the randomly generated bootstrap sample might contain long chains of the same values, thus causing some probabilities in the TPM to be near the boundary 0 or 1. However, a large number of bootstrap samples lowers that risk since we retrieve a 95% CI.

It is important for the bootstrap procedure to take into account the fact that estimates may not necessarily all be in the same order. For example, the first bootstrap may evaluate \((\lambda_1, \lambda_2) = (1.1, 3.1)\) while the second may evaluate to \((\lambda_1, \lambda_2) = (3.11, 1.11)\). Since we are looking to aggregate these estimates in order to derive CI through their 95% quantiles, it is necessary to impose an order on these estimates, to avoid grouping some \(\lambda_1\) with some \(\lambda_2\).

The first that comes to mind is the ascending order. To ensure that \(\hat{{\boldsymbol\lambda}}\) are kept in ascending order, we refer to our Section Label Switching for a solution.